6 tips for AI-assisted coding in science

AI tools are here to stay, and they can help you write code blazingly fast. But you shouldn’t just “vibe-code” and copy and paste AI-generated code until the results look fine. Why? Because:

- there might be errors in there that you don’t see

- the code will likely be very hard to understand and maintain

- the code won’t be re-usable

So, here are 6 tips that I have gathered while coding with AI. At the end, I will also share a final take-away!

AI-assisted coding, using geospatial data science as an example

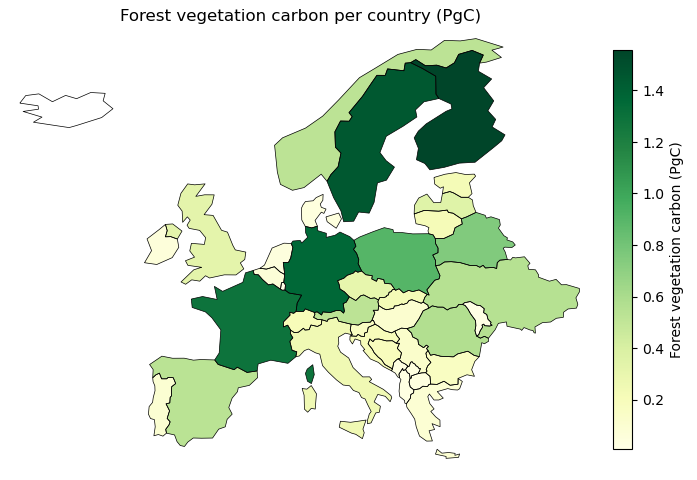

I will walk through these tips using the example shown in the image above: taking an output file of a forest model and plotting the total carbon stored in each European country’s forests. This could have been a tedious job for an afternoon, but in the end it took me no more than 5 minutes.

This is what the data behind the plot looks like:

| Lon | Lat | Year | VegC |
|---|---|---|---|
| 6.25 | 51.75 | 2001 | 0.941 |
| 6.25 | 51.75 | 2002 | 0.954 |
| ... | ... | ... | ... |
| 27.25 | 62.75 | 2001 | 5.302 |
| ... | ... | ... | ... |

So let’s go!!

1. Use the correct tool

Are you just writing scripts, or are you maintaining a larger codebase? For smaller tasks, like analyzing and plotting datasets, your go-to AI chat such as ChatGPT or Gemini is good enough. But if you want the AI to know your entire project, you should use a tool like Copilot, Claude, or Cursor. Try them out to see which suits you best. For the example I want to show here, ChatGPT is enough, as it does not need to know any other code to solve the problem.

2. Code in an iterative way

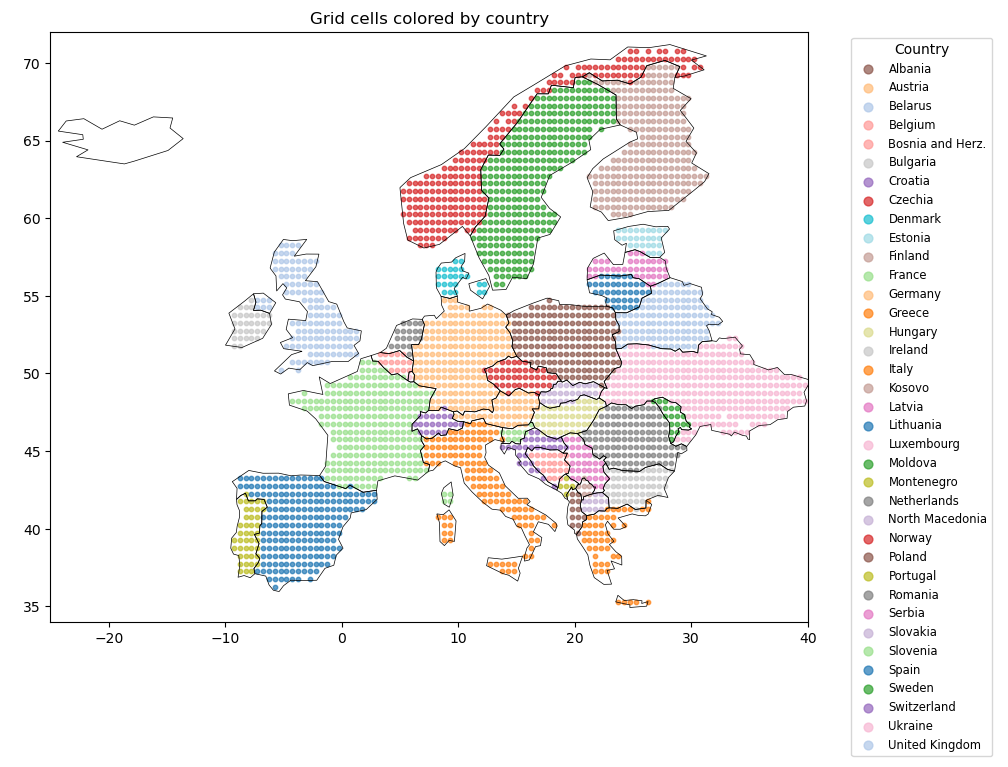

Don’t try to get the whole task done at once. Break it up into smaller pieces. Especially when working with notebooks, you can run the small pieces one at a time and check that each works correctly (see also point 4). In my example:

- Get country data and plot it

- Assign each row in the dataset the country in which it lies

- For each row in the data set, compute the area of its grid cell (depending on the geographic projection)

- Group the data by country and sum up

- Plot the result
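The steps above can be sketched as small pieces that are each checked before the next one is added. This is only an illustration, not the script from this post: `assign_country` is a hypothetical placeholder for the shapefile lookup, the names `cell_area_m2` and `carbon_by_country` are made up, and I assume here that VegC is given per square meter. Plotting is left out.

```python
import math
from collections import defaultdict

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters

# Two rows taken from the sample table in this post (Year column left out).
rows = [
    {"Lon": 6.25, "Lat": 51.75, "VegC": 0.941},
    {"Lon": 27.25, "Lat": 62.75, "VegC": 5.302},
]


def assign_country(row):
    """Hypothetical placeholder: a real script would look the point up in a
    country shapefile instead of splitting the map at 15 degrees longitude."""
    return "West" if row["Lon"] < 15 else "East"


def cell_area_m2(lat_deg, size_deg=0.5):
    """Approximate area of a lat/lon grid cell on a sphere, in square meters."""
    side_m = math.radians(size_deg) * EARTH_RADIUS_M
    return side_m * side_m * math.cos(math.radians(lat_deg))


def carbon_by_country(rows):
    """Sum VegC times cell area per country (assumes VegC is given per m²)."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["country"]] += row["VegC"] * row["area_m2"]
    return dict(totals)


# Piece 1: assign countries, then check before moving on.
for row in rows:
    row["country"] = assign_country(row)
assert all(row["country"] for row in rows)

# Piece 2: compute areas, then check that cells shrink towards the pole.
for row in rows:
    row["area_m2"] = cell_area_m2(row["Lat"])
assert rows[0]["area_m2"] > rows[1]["area_m2"] > 0

# Piece 3: aggregate, then check that nothing got lost along the way.
totals = carbon_by_country(rows)
assert math.isclose(sum(totals.values()), sum(r["VegC"] * r["area_m2"] for r in rows))
```

Each check is cheap, and a failure tells you which piece to look at instead of leaving you to debug the whole script at once.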

3. Use thorough prompts and context

Prompt engineering is a key technique for getting good results from AIs. So give the AI all the information you have: explain the datasets thoroughly and upload any existing files or scripts. That way, it can use all this information to write better code. For instance:

I have a csv file, cpool.csv, which is delimited by tabs. This file contains the columns Lon, Lat, Year, VegC. It has one row per grid cell and year at a 0.5 degree resolution and spans Europe’s land area. First, read in the file. Second, keep only those rows where the year is 2010, and drop the Year column. Then, add a column called “country” to each row and assign it the name of the country that the grid cell lies in using the shapefile ne_110m_admin_0_countries.shp.
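To give an idea of what the first two steps of such a prompt might turn into, here is a minimal sketch using only Python's standard library. It is not ChatGPT's actual answer: the function name `load_year` is hypothetical, the file contents are replaced by an in-memory string, and the two 2010 rows are invented so that the filter has something to keep. The country assignment needs a geospatial library and is not shown.

```python
import csv
import io

# Stand-in for the contents of cpool.csv (tab-delimited).
SAMPLE_TEXT = (
    "Lon\tLat\tYear\tVegC\n"
    "6.25\t51.75\t2001\t0.941\n"   # row from the sample table in this post
    "6.25\t51.75\t2010\t1.000\n"   # invented row for illustration
    "27.25\t62.75\t2010\t5.000\n"  # invented row for illustration
)


def load_year(text, year):
    """Parse tab-delimited text, keep rows of one year, and drop the Year column."""
    reader = csv.DictReader(io.StringIO(text), delimiter="\t")
    selected = []
    for row in reader:
        if int(row["Year"]) != year:
            continue
        selected.append(
            {"Lon": float(row["Lon"]), "Lat": float(row["Lat"]), "VegC": float(row["VegC"])}
        )
    return selected


rows_2010 = load_year(SAMPLE_TEXT, 2010)
print(rows_2010)
```

Because the prompt spells out the delimiter, the column names, and the year to keep, each of those details maps directly onto one line of the code, which makes the result easy to check against what you asked for.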

4. Let the AI add tests and assertions

This is a key point. Don’t just copy-paste the code and hope for the best - CHECK IT! Assertions and tests offer perfect ways to check intermediate steps of the process, making sure that everything is correct. And the AI can help you write them.

4.1 Assertions

Assertions are short checks within the code, and you can use them to verify that intermediate steps are correct. Funnily, in this example, ChatGPT actually made an error when converting units! I detected it easily by having it add an assertion:

Please add an assertion checking that the total area of the data points sums up to about 6 million square kilometers!

The result was this, and it helped me find an error in ChatGPT’s code:

    total_grid_area_km2 = gdf["area"].sum() / 1e6  # convert m² to km²

    # Assert the total area is roughly 6 million km²
    assert 5.9e6 <= total_grid_area_km2 <= 6.1e6, f"Total grid area seems to be incorrect: {total_grid_area_km2:.0f} km²"

When I ran the script again, the assertion failed, pointing me to an error in the code.
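To illustrate how such an assertion catches a unit slip, here is a small self-contained sketch. The helper `check_total_area` and the constants are hypothetical, and the double conversion is an invented example of a unit error, not necessarily the one ChatGPT made.

```python
import math

EXPECTED_AREA_KM2 = 6.0e6  # rough total area expected for the data set
M2_PER_KM2 = 1_000_000


def check_total_area(total_area_m2, rel_tol=0.02):
    """Convert a total area from m² to km² and assert it is roughly as expected."""
    total_km2 = total_area_m2 / M2_PER_KM2
    assert math.isclose(total_km2, EXPECTED_AREA_KM2, rel_tol=rel_tol), (
        f"Total grid area seems to be incorrect: {total_km2:.0f} km²"
    )
    return total_km2


# Correct input in m²: the assertion passes silently.
check_total_area(6.02e12)

# Invented slip: the value was already in km², so it gets converted twice.
try:
    check_total_area(6.02e6)
except AssertionError as error:
    print(error)
```

The important part is that the expected value comes from knowledge outside the script (here, the rough land area), so the check does not simply repeat the computation it is supposed to verify.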

4.2 Tests

This might be a complicated part, but I urge everyone to familiarize themselves with the concept of unit testing! Tests are a more rigorous way to check the correctness of the code. They usually live in a separate file and have to be executed separately (unlike assertions, which run within the script we are writing).

In Python, however, you can also keep such tests in the same file as the code by using the doctest module.

Here is how it works:

From the script you generated, please extract the area computation into a function compute_gridcell_area() that takes the latitude and the size (in degrees) of a gridcell as input and computes the gridcell area in square meters. Please add a Python doctest checking that this function works for size 0.5° and an error margin of 1%, using the following test cases:

- lat = 0° –> 3077274420 m²

- lat = 45° –> 2190625912 m²

- lat = 75° –> 806495859 m²

The outcome is (with some adaptations by me):

    import numpy as np


    def compute_gridcell_area_meters(lat, size_deg=0.5, earth_radius=6371000):
        """
        Approximate area (in m²) of a grid cell at latitude `lat` (in degrees).

        Using some values to test whether the script works correctly
        (bool() keeps the output a plain True regardless of the NumPy version):
        >>> bool(abs(compute_gridcell_area_meters(0, 0.5) - 3077274420) / 3077274420 < 0.01)
        True
        >>> bool(abs(compute_gridcell_area_meters(45, 0.5) - 2190625912) / 2190625912 < 0.01)
        True
        >>> bool(abs(compute_gridcell_area_meters(75, 0.5) - 806495859) / 806495859 < 0.01)
        True
        """
        lat_rad = np.radians(lat)
        dphi = size_deg * (np.pi / 180) * earth_radius  # N–S extent
        dlambda = size_deg * (np.pi / 180) * earth_radius * np.cos(lat_rad)  # E–W extent
        return dphi * dlambda

My IDE (integrated development environment, such as Visual Studio or PyCharm) can then execute these tests and tell me whether the code does what it’s supposed to do.

One critical thing here: you have to check that the test can also fail, for instance by changing some of the numbers. Then you can be sure that the test is actually doing something.
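If you prefer to see this outside an IDE, here is a rough sketch that runs a doctest programmatically and confirms that it fails when the code is wrong. The function names `cell_area`, `cell_area_broken`, and `count_failures` are hypothetical, and I use the standard `math` module instead of NumPy to keep the example dependency-free. From a terminal, `python -m doctest your_script.py` runs all doctests in a file.

```python
import doctest
import math


def cell_area(lat, size_deg=0.5, earth_radius=6371000):
    """
    >>> abs(cell_area(0) - 3077274420) / 3077274420 < 0.01
    True
    """
    side = math.radians(size_deg) * earth_radius
    return side * side * math.cos(math.radians(lat))


def cell_area_broken(lat, size_deg=0.5, earth_radius=6371000):
    """
    Same doctest, but the body forgets the degree-to-radian conversion.

    >>> abs(cell_area_broken(0) - 3077274420) / 3077274420 < 0.01
    True
    """
    side = size_deg * earth_radius
    return side * side * math.cos(lat)


def count_failures(func):
    """Run the doctest examples in func's docstring and return how many failed."""
    parser = doctest.DocTestParser()
    test = parser.get_doctest(func.__doc__, {func.__name__: func}, func.__name__, None, 0)
    runner = doctest.DocTestRunner(verbose=False)
    results = runner.run(test, out=lambda text: None)  # silence the failure report
    return results.failed


print(count_failures(cell_area))         # correct version
print(count_failures(cell_area_broken))  # deliberately broken version
```

If the broken version reported zero failures as well, the test would not be checking anything, which is exactly the situation the tip above warns about.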

5. Clean up and make it tidy and understandable

When you or somebody else needs to get back to this code, it will be super hard to understand. That’s why you should rename variables and functions, extract pieces into new functions, remove duplications, and store magic numbers in named constants. For instance:

- Instead of calling the dataframe df, call it forest_carbon

- Instead of having one large file, extract functions like compute_grid_cell_area()

- Instead of using magic numbers like 9.81, store them in constants such as GRAVITATIONAL_ACCELERATION = 9.81 and use these.

For this topic, please see also my other post: variable naming and function extraction

Notably, AI can probably help you with cleaning up. You can even ask it for a code review and it will give you some tips:

Please give me a code review for the following script: …

Here is part of the response:

Magic numbers in conversions: You compute PgC as kgC / (1000 * 1000 * 1000 * 1000). That’s correct (1 Pg = 10¹⁵ g = 10¹² kg), but it’s error-prone. 👉 Better:

    PgC = total_europe_kgC / 1e12  # since VegC is in kgC

You can see that the response is not really good (the suggested fix still leaves a bare number in the code), but it does point you to the problem. So this is how I changed it:

Before:

    print(f"Total vegetation carbon in Europe: {total_europe_kg / (1000*1000*1000*1000):.2f} PgC")

After:

    CONVERSION_FACTOR_kg_TO_Pg = 1e12
    print(f"Total vegetation carbon in Europe: {total_europe_kg / CONVERSION_FACTOR_kg_TO_Pg:.2f} PgC")

6. Use a new context for each problem

In ChatGPT and similar tools, don’t keep one chat open into which you dump all your problems. For each problem, start a new chat. That way, you always have a context that contains all relevant information regarding your task, and nothing else. Generally, this is a key point for using AI: having well-designed contexts.

My final take-away

Think of the AI as a new intern who is really good at coding. They can only do satisfactory work if you guide them well. And at the end, you need to check whether they did a good job, and improve their first draft.

Hopefully this was helpful to you!

If you want to have a little laugh at AI, check out my ChatGPT song on YouTube
